3.3 — Omitted Variable Bias

ECON 480 • Econometrics • Fall 2021

Ryan Safner

Assistant Professor of Economics

safner@hood.edu

ryansafner/metricsF21

metricsF21.classes.ryansafner.com

Review: u

$$Y_i = \beta_0 + \beta_1 X_i + u_i$$

$u_i$ includes all other variables that affect $Y$

Every regression model always has omitted variables assumed in the error

- Most are unobservable (hence “u”)

- Examples: innate ability, weather at the time, etc.

Again, we assume $u$ is random, with $E[u|X]=0$ and $var(u)=\sigma^2_u$

Sometimes, omission of variables can bias OLS estimators ($\hat{\beta}_0$ and $\hat{\beta}_1$)

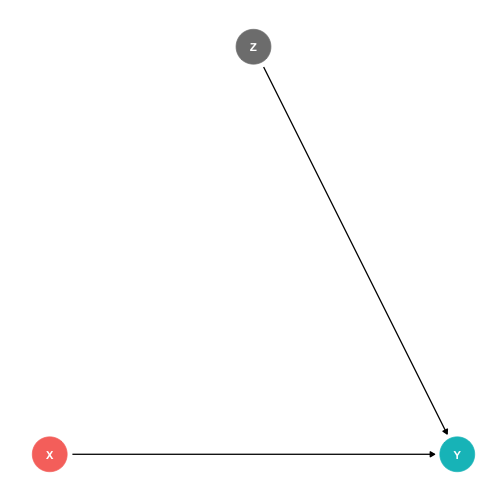

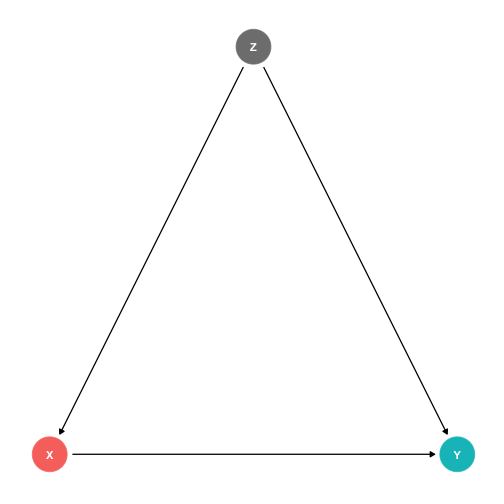

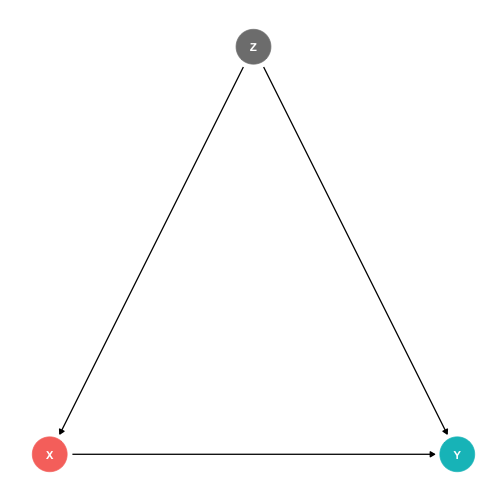

Omitted Variable Bias I

- Omitted variable bias (OVB) for some omitted variable Z exists if two conditions are met:

1. Z is a determinant of Y

    - i.e. Z is in the error term, $u_i$

2. Z is correlated with the regressor X

    - i.e. $cor(X,Z) \neq 0$

    - implies $cor(X,u) \neq 0$

    - implies X is endogenous

Omitted Variable Bias II

Omitted variable bias makes X endogenous

Violates the zero conditional mean assumption: $E[u_i|X_i] \neq 0 \implies$

- knowing $X_i$ tells you something about $u_i$ (i.e. something about $Y$ not by way of $X$)!

Omitted Variable Bias III

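$\hat{\beta}_1$ is biased: $E[\hat{\beta}_1] \neq \beta_1$

$\hat{\beta}_1$ systematically over- or under-estimates the true relationship ($\beta_1$)

$\hat{\beta}_1$ “picks up” both pathways:

- $X \rightarrow Y$

- $X \leftarrow Z \rightarrow Y$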
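
The two pathways can be seen in a small simulation. This is only a sketch on made-up data: the sample size, the coefficients (true slope of 2, effect of Z of 3), and the variable names `x`, `y`, `z` are all assumptions for illustration, not anything from the class data.

```r
# Simulated illustration of omitted variable bias (all numbers are made up)
set.seed(480)
n <- 10000

z <- rnorm(n)                        # the omitted variable Z
x <- 0.5 * z + rnorm(n)              # Condition 2: X is correlated with Z
y <- 1 + 2 * x + 3 * z + rnorm(n)    # Condition 1: Z is a determinant of Y; true beta_1 = 2

short_reg <- lm(y ~ x)               # omits Z, so Z sits in the error term
long_reg  <- lm(y ~ x + z)           # includes Z

b1_short <- unname(coef(short_reg)["x"])   # picks up X -> Y and X <- Z -> Y
b1_long  <- unname(coef(long_reg)["x"])    # picks up X -> Y only

c(short = b1_short, long = b1_long)
```

With these made-up parameters the short regression slope should land near 3.2 (that is, 2 + 3 × 0.5/1.25) rather than 2, while the long regression slope should be close to 2.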

Omitted Variable Bias: Class Size Example

Example: Consider our recurring class size and test score example:

$$\text{Test score}_i = \beta_0 + \beta_1 STR_i + u_i$$

- Which of the following possible variables would cause a bias if omitted?

1. $Z_i$: time of day of the test

2. $Z_i$: parking space per student

3. $Z_i$: percent of ESL students

Recall: Endogeneity and Bias

- (Recall): the true expected value of $\hat{\beta}_1$ is actually:†

$$E[\hat{\beta}_1] = \beta_1 + cor(X,u)\frac{\sigma_u}{\sigma_X}$$

1) If $X$ is exogenous: $cor(X,u)=0$, we're just left with $\beta_1$

2) The larger $cor(X,u)$ is in magnitude, the larger the bias: $(E[\hat{\beta}_1]-\beta_1)$

3) We can “sign” the direction of the bias based on $cor(X,u)$

- Positive $cor(X,u)$ overestimates the true $\beta_1$ ($\hat{\beta}_1$ is too large)

- Negative $cor(X,u)$ underestimates the true $\beta_1$ ($\hat{\beta}_1$ is too small)

† See 2.4 class notes for proof.
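
To tie this formula to a specific omitted variable, it may help to work through one special case. Suppose (as an assumption for illustration) that the error is $u_i = \beta_2 Z_i + \varepsilon_i$, where $\beta_2$ is the effect of $Z$ on $Y$ and $\varepsilon_i$ is uncorrelated with $X_i$. The symbols $\beta_2$ and $\varepsilon_i$ are introduced here and are not part of the bivariate model above. Then the bias term simplifies to:

$$cor(X,u)\frac{\sigma_u}{\sigma_X} = \frac{cov(X,u)}{\sigma_X \sigma_u} \cdot \frac{\sigma_u}{\sigma_X} = \frac{cov(X,u)}{\sigma_X^2} = \beta_2 \frac{cov(X,Z)}{var(X)}$$

Under that assumption, the sign of the bias is the sign of $\beta_2$ times the sign of $cov(X,Z)$, which matches the two OVB conditions: the bias vanishes if $Z$ does not affect $Y$ ($\beta_2 = 0$) or if $Z$ is uncorrelated with $X$.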

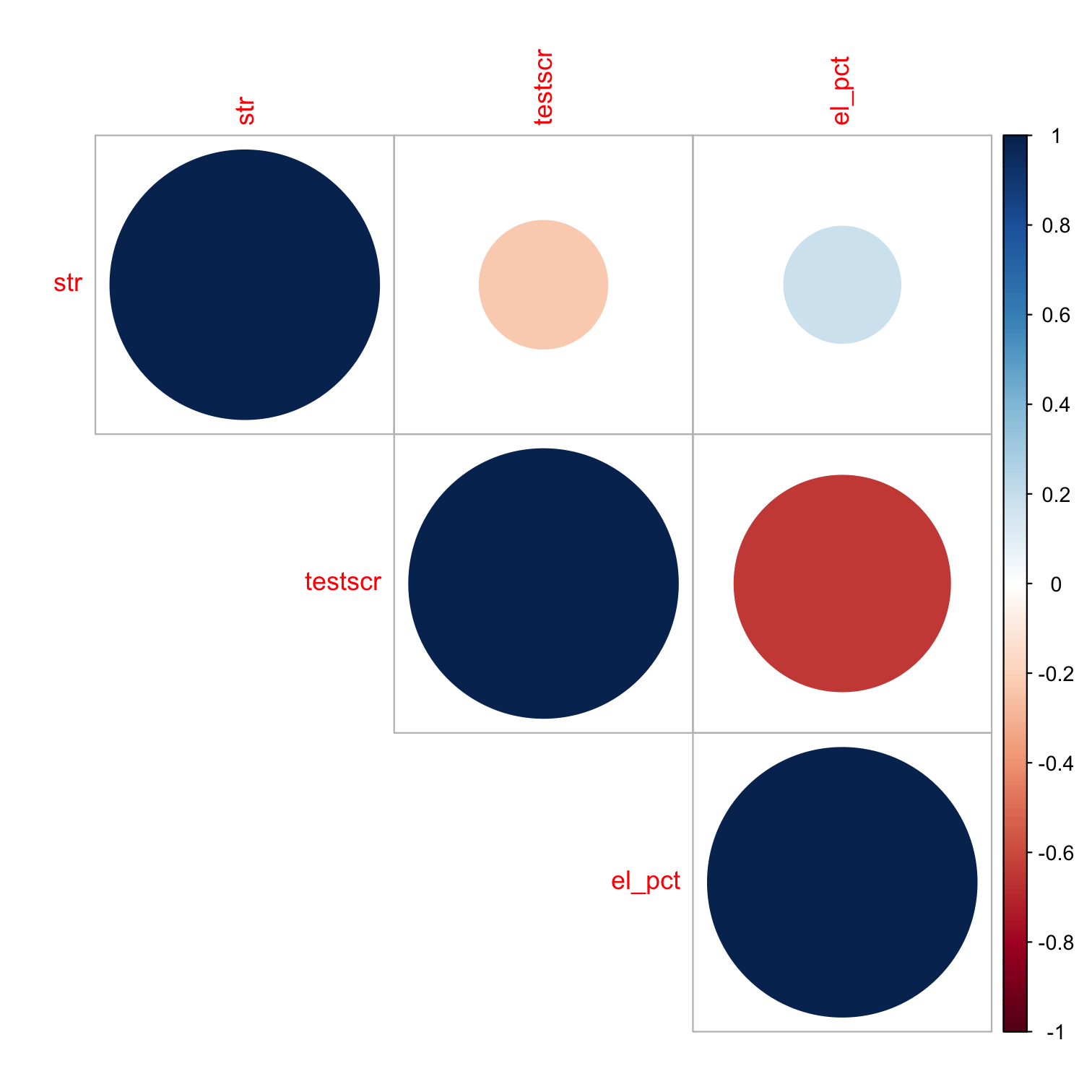

Endogeneity and Bias: Correlations I

- Here is where checking correlations between variables helps:

    # Select only the three variables we want (there are many)
    # (assumes the tidyverse is loaded and CASchool is in memory)
    CAcorr <- CASchool %>%
      select("str", "testscr", "el_pct")

    # Make a correlation table
    cor_table <- cor(CAcorr)
    cor_table # look at it

    ##                str    testscr     el_pct
    ## str      1.0000000 -0.2263628  0.1876424
    ## testscr -0.2263628  1.0000000 -0.6441237
    ## el_pct   0.1876424 -0.6441237  1.0000000

- el_pct is strongly (negatively) correlated with testscr (Condition 1)

- el_pct is reasonably (positively) correlated with str (Condition 2)

Endogeneity and Bias: Correlations II

- Here is where checking correlations between variables helps:

    # Make a correlation plot
    library(corrplot)

    corrplot(cor_table, type = "upper",
             method = "circle",
             order = "original")

- el_pct is strongly correlated with testscr (Condition 1)

- el_pct is reasonably correlated with str (Condition 2)

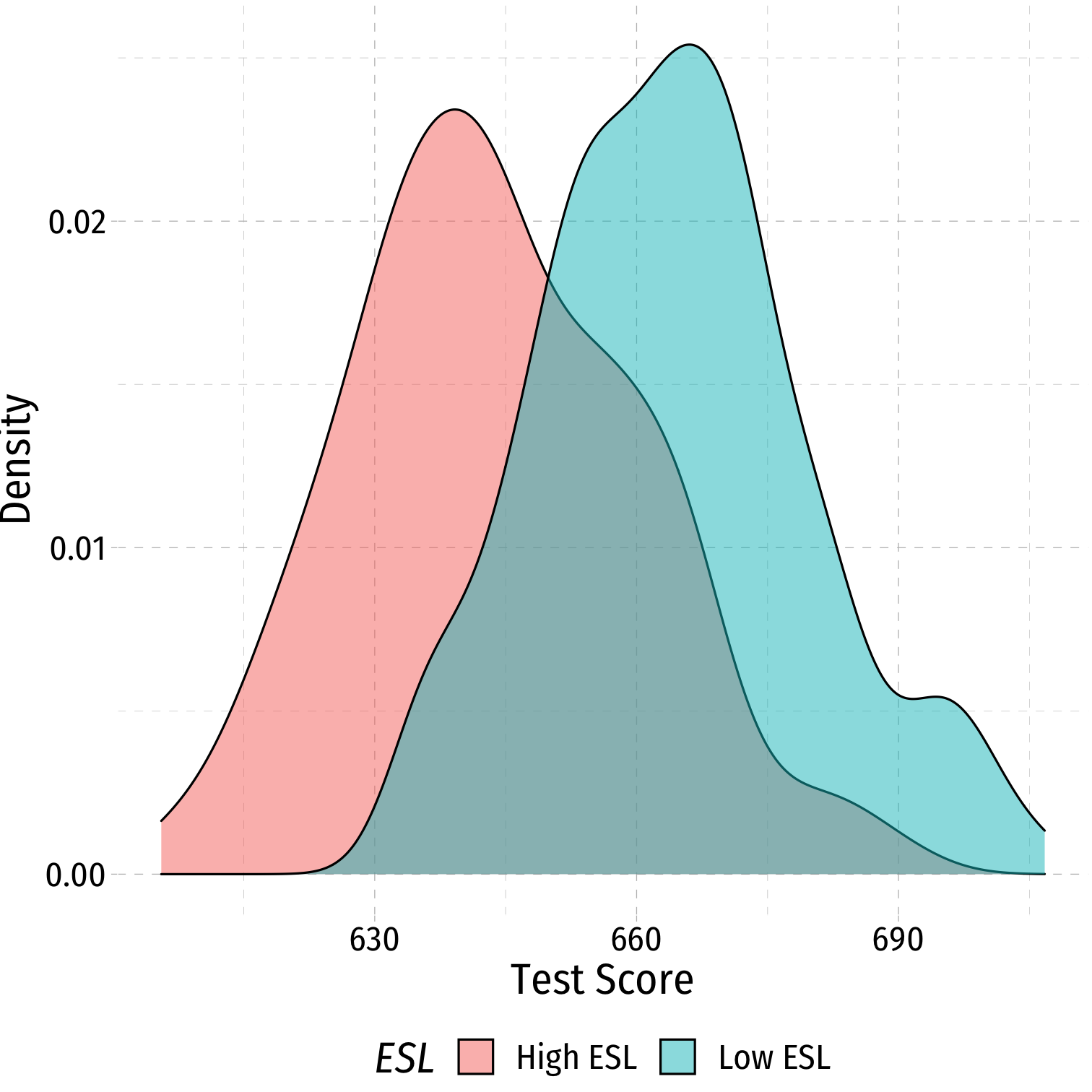

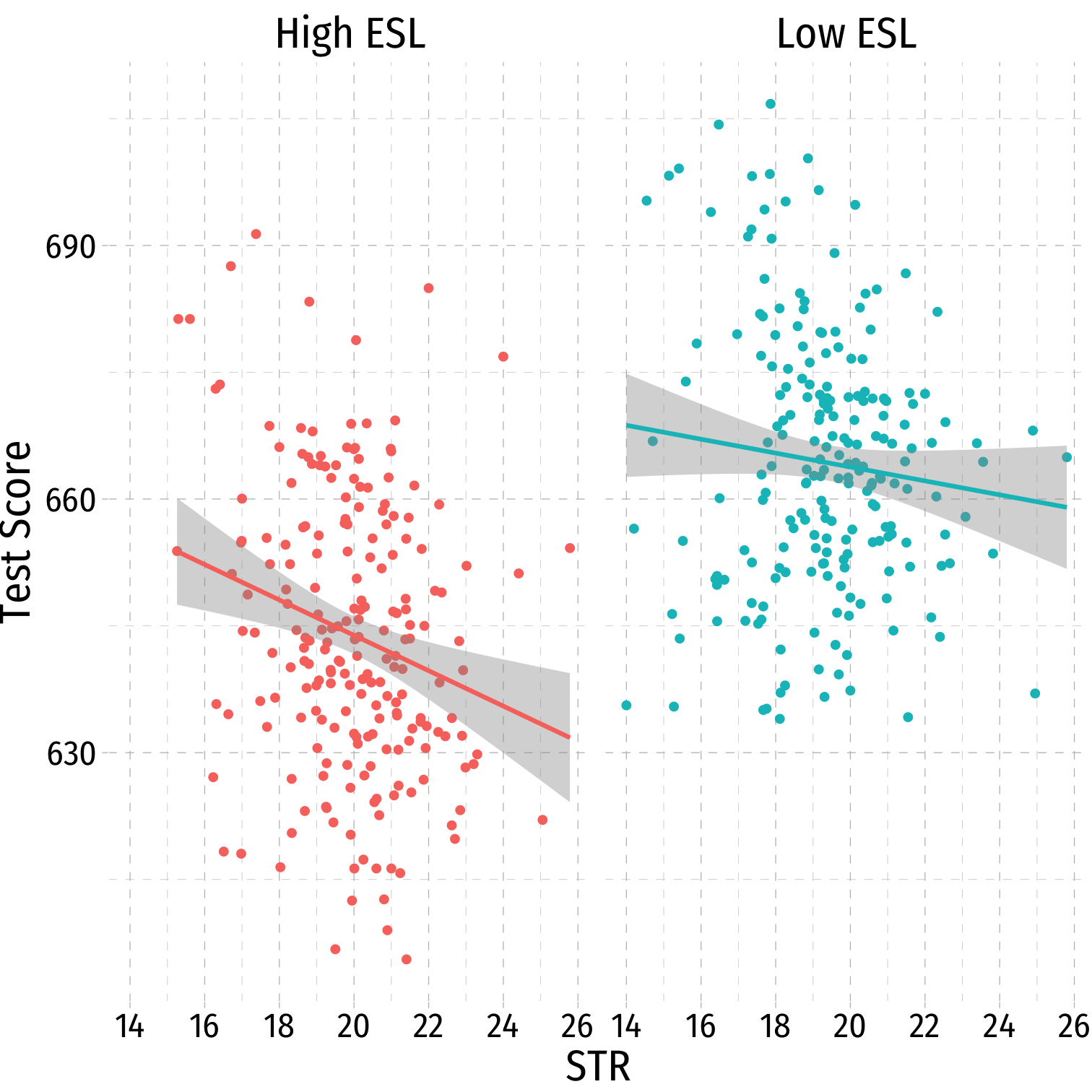

Look at Conditional Distributions I

    # make a new variable called ESL
    # = "High ESL" (if el_pct is above median) or "Low ESL" (otherwise)
    CASchool <- CASchool %>% # next we create a new dummy variable called ESL
      mutate(ESL = ifelse(el_pct > median(el_pct), # test if el_pct is above median
                          yes = "High ESL",        # if yes, call this variable "High ESL"
                          no = "Low ESL"))         # if no, call this variable "Low ESL"

    # get average test score by high/low ESL
    CASchool %>%
      group_by(ESL) %>%
      summarize(Average_test_score = mean(testscr))

| ESL | Average_test_score |
|---|---|
| High ESL | 643.9591 |
| Low ESL | 664.3540 |

Look at Conditional Distributions II

    ggplot(data = CASchool)+
      aes(x = testscr,
          fill = ESL)+
      geom_density(alpha = 0.5)+
      labs(x = "Test Score",
           y = "Density")+
      ggthemes::theme_pander(
        base_family = "Fira Sans Condensed",
        base_size = 20
      )+
      theme(legend.position = "bottom")

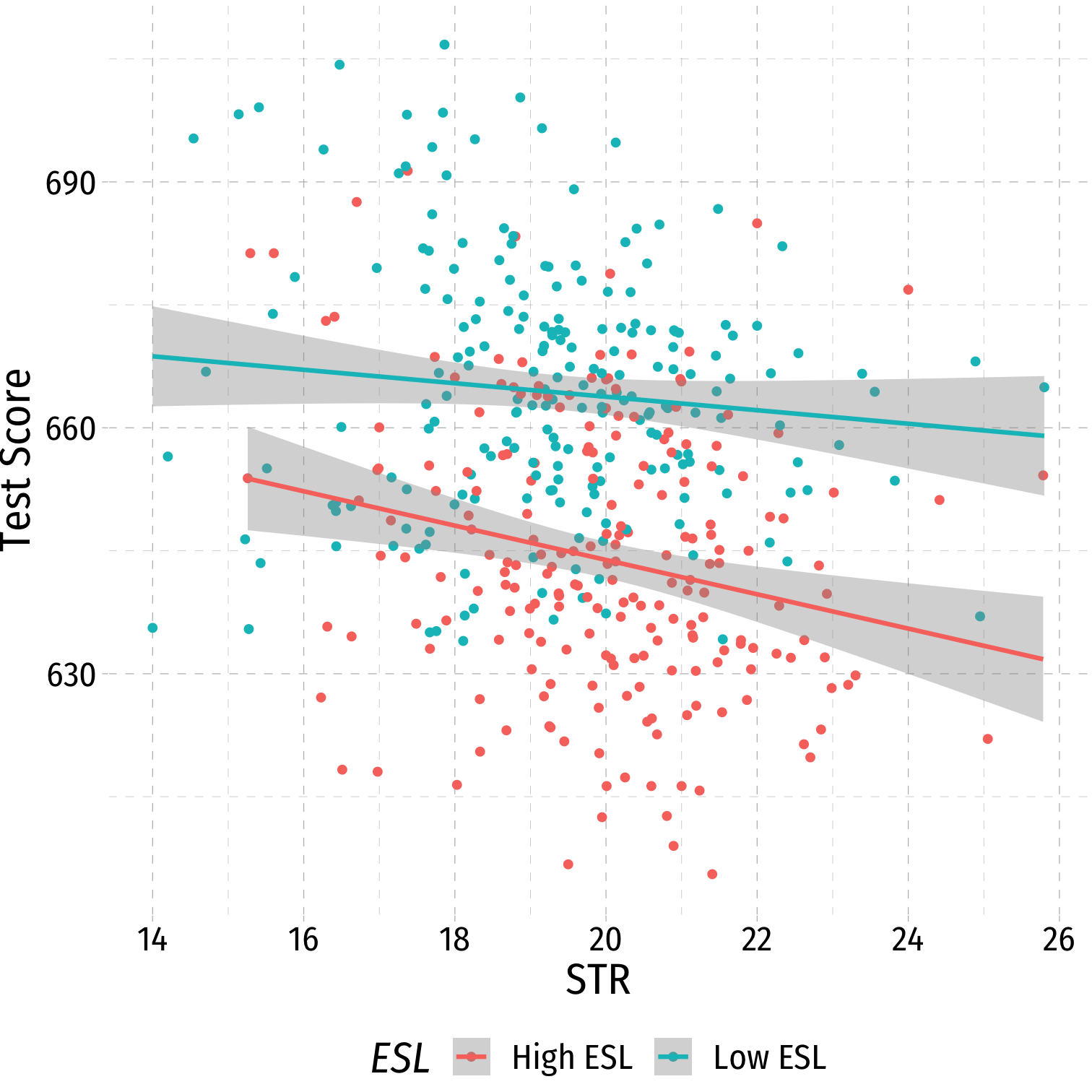

Look at Conditional Distributions III

    esl_scatter <- ggplot(data = CASchool)+
      aes(x = str,
          y = testscr,
          color = ESL)+
      geom_point()+
      geom_smooth(method = "lm")+
      labs(x = "STR",
           y = "Test Score")+
      ggthemes::theme_pander(
        base_family = "Fira Sans Condensed",
        base_size = 20
      )+
      theme(legend.position = "bottom")

    esl_scatter

Splitting the same plot into one panel per ESL group:

    esl_scatter+
      facet_grid(~ESL)+
      guides(color = "none") # hide the redundant color legend

Omitted Variable Bias in the Class Size Example

$$E[\hat{\beta}_1] = \beta_1 + bias$$

$cor(STR, \%EL)$ is positive

%EL lowers Test score, so $u$ (which contains %EL) is negatively correlated with STR: $cor(STR,u) < 0$

$\beta_1$ is negative (between Test score and STR)

Bias is negative

- Since $\beta_1$ is also negative, $\hat{\beta}_1$ is made to be a larger negative number than it truly is

- Implies that $\hat{\beta}_1$ overstates the effect of reducing STR on improving Test Scores

Omitted Variable Bias: Messing with Causality I

If school districts with lower STR happen to have both higher Test Scores AND less %EL ...

How can we say $\hat{\beta}_1$ estimates the marginal effect of $\Delta STR \rightarrow \Delta \text{Test Score}$?

(We can’t.)

Omitted Variable Bias: Messing with Causality II

Consider an ideal randomized controlled trial (RCT)

Randomly assign experimental units (e.g. people, cities, etc) into two (or more) groups:

- Treatment group(s): gets a (certain type or level of) treatment

- Control group(s): gets no treatment(s)

Compare results of two groups to get average treatment effect

RCTs Neutralize Omitted Variable Bias I

Example: Imagine an ideal RCT for measuring the effect of STR on Test Score

School districts would be randomly assigned a student-teacher ratio

With random assignment, all factors in u (%ESL students, family size, parental income, years in the district, day of the week of the test, climate, etc) are distributed independently of class size

RCTs Neutralize Omitted Variable Bias II

Example: Imagine an ideal RCT for measuring the effect of STR on Test Score

Thus, $cor(STR,u)=0$ and $E[u|STR]=0$, i.e. exogeneity

Our $\hat{\beta}_1$ would be an unbiased estimate of $\beta_1$, measuring the true causal effect of STR → Test Score
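
A rough way to see this is to simulate the same outcome equation twice: once with class size tied to a factor in $u$, and once with class size reshuffled at random. Everything here is hypothetical: the variable names, the assumed true effect of −1, and the effect of −5 for the stand-in factor `z` are made up for illustration and are not estimates from the California data.

```r
# Observational data vs. a hypothetical RCT (all numbers are made up)
set.seed(2021)
n <- 10000

z <- rnorm(n)                              # stand-in for a factor in u (e.g. %EL)
str_observed <- 20 + 1.0 * z + rnorm(n)    # observational: class size is correlated with z
str_assigned <- sample(str_observed)       # "RCT": same class sizes, randomly reassigned

beta_1 <- -1                               # assumed true causal effect of STR
score_obs <- 700 + beta_1 * str_observed - 5 * z + rnorm(n)
score_rct <- 700 + beta_1 * str_assigned - 5 * z + rnorm(n)

# Both regressions omit z
b1_obs <- unname(coef(lm(score_obs ~ str_observed))[2])
b1_rct <- unname(coef(lm(score_rct ~ str_assigned))[2])

c(observational = b1_obs, randomized = b1_rct)
cor(str_assigned, z)                       # close to zero under random assignment
```

Both regressions leave `z` out. Only the randomized version should recover a slope near the assumed −1, because random assignment breaks the link between class size and `z`.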

But We Rarely, if Ever, Have RCTs

But we didn't run an RCT; this is observational data!

“Treatment” of having a large or small class size is NOT randomly assigned!

%EL: plausibly fits criteria of omitted variable bias!

- %EL is a determinant of Test Score

- %EL is correlated with STR

Thus, “control” group and “treatment” group differ systematically!

- Districts with small STR also tend to have lower %EL; districts with large STR also tend to have higher %EL

- Selection bias: $cor(STR,\%EL) \neq 0$, $E[u_i|STR_i] \neq 0$

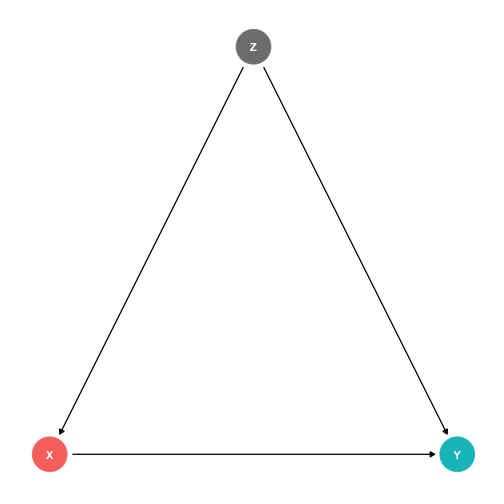

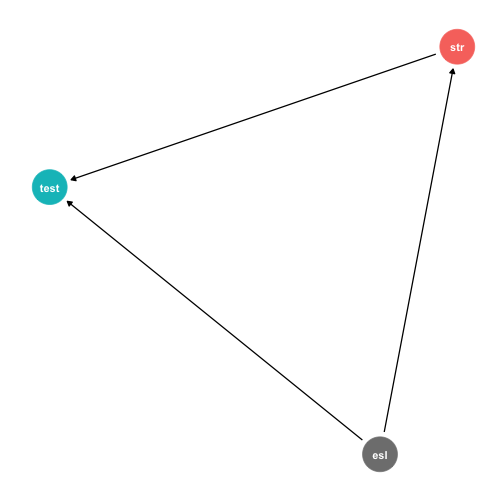

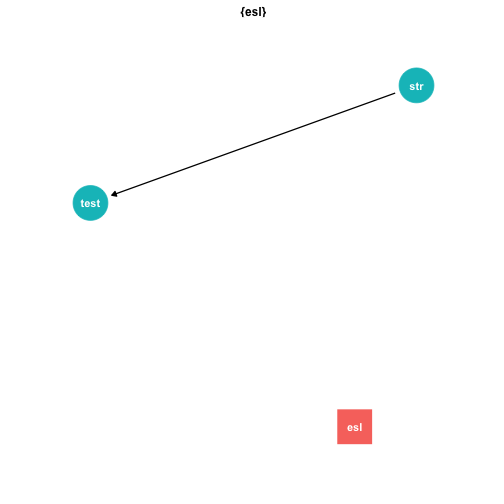

Another Way to Control for Variables

Pathways connecting str and test score:

- str → test score

- str ← ESL → test score

DAG rules tell us we need to control for ESL in order to identify the causal effect of str → test score

So now, how do we control for a variable?

Controlling for Variables

Look at effect of STR on Test Score by comparing districts with the same %EL

- Eliminates differences in %EL between high and low STR classes

- “As if” we had a control group! Hold %EL constant

The simple fix is just to not omit %EL!

- Make it another independent variable on the right-hand side of the regression
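
The idea of “comparing districts with the same %EL” can be sketched with simulated data. The dummy `high_el`, the variable names, and every coefficient below (including the assumed true STR effect of −1) are hypothetical choices for illustration only.

```r
# Holding a variable constant, two ways (simulated data, made-up numbers)
set.seed(42)
n <- 5000

high_el   <- rbinom(n, size = 1, prob = 0.5)          # hypothetical dummy: 1 = high %EL district
str_ratio <- 19 + 2 * high_el + rnorm(n)              # high-%EL districts have larger classes
testscr   <- 700 - 1 * str_ratio - 20 * high_el + rnorm(n, sd = 5)  # assumed true STR effect = -1

# Naive regression across all districts (omits %EL)
b_naive <- unname(coef(lm(testscr ~ str_ratio))["str_ratio"])

# Compare only districts with the same %EL status
b_low  <- unname(coef(lm(testscr ~ str_ratio, subset = high_el == 0))["str_ratio"])
b_high <- unname(coef(lm(testscr ~ str_ratio, subset = high_el == 1))["str_ratio"])

# Or simply put the variable on the right-hand side
b_ctrl <- unname(coef(lm(testscr ~ str_ratio + high_el))["str_ratio"])

round(c(naive = b_naive, low_el_only = b_low, high_el_only = b_high, controlled = b_ctrl), 2)
```

In this setup the naive slope should be far more negative than −1, while the within-group slopes and the controlled slope should all sit near −1. Adding the variable to the regression does in one step what the group-by-group comparison does by hand.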

The Multivariate Regression Model

Multivariate Econometric Models Overview

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \cdots + \beta_k X_{ki} + u_i$$

- $Y$ is the dependent variable of interest

    - AKA "response variable," "regressand," "Left-hand side (LHS) variable"

- $X_1, X_2, \cdots, X_k$ are independent variables

    - AKA "explanatory variables," "regressors," "Right-hand side (RHS) variables," "covariates"

- Our data consists of a spreadsheet of observed values of $(X_{1i}, X_{2i}, \cdots, X_{ki}, Y_i)$

- To model, we "regress $Y$ on $X_1, X_2, \cdots, X_k$"

- $\beta_0, \beta_1, \cdots, \beta_k$ are parameters that describe the population relationships between the variables

    - We estimate $k+1$ parameters (“betas”)†

† Note Bailey defines $k$ to include both the variables and the constant.

Marginal Effects I

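$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i}$$

- Consider changing $X_1$ by $\Delta X_1$ while holding $X_2$ constant:

$$\begin{aligned}
Y &= \beta_0 + \beta_1 X_1 + \beta_2 X_2 && \text{Before the change}\\
Y + \Delta Y &= \beta_0 + \beta_1 (X_1 + \Delta X_1) + \beta_2 X_2 && \text{After the change}\\
\Delta Y &= \beta_1 \Delta X_1 && \text{The difference}\\
\frac{\Delta Y}{\Delta X_1} &= \beta_1 && \text{Solving for } \beta_1
\end{aligned}$$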

Marginal Effects II

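$$\beta_1 = \frac{\Delta Y}{\Delta X_1} \text{ holding } X_2 \text{ constant}$$

Similarly, for $\beta_2$:

$$\beta_2 = \frac{\Delta Y}{\Delta X_2} \text{ holding } X_1 \text{ constant}$$

And for the constant, $\beta_0$:

$$\beta_0 = \text{predicted value of } Y \text{ when } X_1=0, X_2=0$$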
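
These interpretations can be checked mechanically on a fitted model. The sketch below uses simulated data with made-up coefficients, and the evaluation points (`x1 = 10`, `x2 = 4`) are arbitrary. The point is only that the change in predicted $Y$ from a one-unit change in $X_1$, with $X_2$ held fixed, equals the estimated coefficient on $X_1$.

```r
# Marginal effects in a two-regressor model (simulated data, made-up numbers)
set.seed(1)
n  <- 200
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- 5 + 2 * x1 - 3 * x2 + rnorm(n)

fit <- lm(y ~ x1 + x2)

# Predicted Y before and after raising X1 by 1, holding X2 fixed at 4
before <- predict(fit, newdata = data.frame(x1 = 10, x2 = 4))
after  <- predict(fit, newdata = data.frame(x1 = 11, x2 = 4))

delta_y <- unname(after - before)
b1_hat  <- unname(coef(fit)["x1"])
all.equal(delta_y, b1_hat)        # the difference is the coefficient on x1

# The constant: predicted Y when X1 = 0 and X2 = 0
at_zero <- unname(predict(fit, newdata = data.frame(x1 = 0, x2 = 0)))
b0_hat  <- unname(coef(fit)["(Intercept)"])
all.equal(at_zero, b0_hat)
```

The same comparison works at any value of `x2`, since in this linear model the marginal effect of $X_1$ does not depend on where $X_2$ is held.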

You Can Keep Your Intuitions...But They're Wrong Now

We have been envisioning OLS regressions as the equation of a line through a scatterplot of data on two variables, X and Y

- $\beta_0$: “intercept”

- $\beta_1$: “slope”

With 3+ variables, OLS regression is no longer a “line” for us to estimate...

The “Constant”

Alternatively, we can write the population regression equation as:

$$Y_i = \beta_0 X_{0i} + \beta_1 X_{1i} + \beta_2 X_{2i} + u_i$$

Here, we added $X_{0i}$ to $\beta_0$

$X_{0i}$ is a constant regressor, as we define $X_{0i}=1$ for all $i$ observations

Likewise, $\beta_0$ is more generally called the “constant” term in the regression (instead of the “intercept”)

This may seem silly and trivial, but this will be useful next class!
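
In R, the constant regressor is visible in the design matrix that `lm()` builds. The following sketch uses simulated data (made-up coefficients; the name `x0` is chosen here to mirror $X_{0i}$) and compares the default fit with one where the automatic constant is suppressed and a column of 1s is supplied by hand.

```r
# The constant as a regressor that always equals 1 (simulated data)
set.seed(7)
n  <- 100
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- 1 + 2 * x1 + 3 * x2 + rnorm(n)

x0 <- rep(1, n)                            # X0 = 1 for every observation

fit_default  <- lm(y ~ x1 + x2)            # R adds the constant automatically
fit_explicit <- lm(y ~ 0 + x0 + x1 + x2)   # suppress it and supply X0 ourselves

head(model.matrix(fit_default), 3)         # the "(Intercept)" column is all 1s

# Same estimates either way; only the label on the first coefficient differs
rbind(default = unname(coef(fit_default)), explicit = unname(coef(fit_explicit)))
```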

The Population Regression Model: Example I

Example:

$$\text{Beer Consumption}_i = \beta_0 + \beta_1 \text{Price}_i + \beta_2 \text{Income}_i + \beta_3 \text{Nachos Price}_i + \beta_4 \text{Wine Price}_i + u_i$$

Let's see what you remember from micro(econ)!

- What measures the price effect? What sign should it have?

- What measures the income effect? What sign should it have? What should inferior or normal (necessities & luxury) goods look like?

- What measures the cross-price effect(s)? What sign should substitutes and complements have?

The Population Regression Model: Example I

Example:

$$\widehat{\text{Beer Consumption}}_i = 20 - 1.5 \, \text{Price}_i + 1.25 \, \text{Income}_i - 0.75 \, \text{Nachos Price}_i + 1.3 \, \text{Wine Price}_i$$

- Interpret each $\hat{\beta}$
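
One way to practice the interpretation is to code the fitted equation and change one input at a time. The function name `beer_hat` and the input values below are hypothetical (the example does not give units), so only the differences are meaningful.

```r
# The fitted equation from the example, written as a function
beer_hat <- function(price, income, nachos_price, wine_price) {
  20 - 1.5 * price + 1.25 * income - 0.75 * nachos_price + 1.3 * wine_price
}

# Hypothetical starting values
base <- beer_hat(price = 5, income = 10, nachos_price = 4, wine_price = 8)

# Raise one variable by 1 unit, holding the others constant
d_price  <- beer_hat(price = 6, income = 10, nachos_price = 4, wine_price = 8) - base
d_income <- beer_hat(price = 5, income = 11, nachos_price = 4, wine_price = 8) - base
d_nachos <- beer_hat(price = 5, income = 10, nachos_price = 5, wine_price = 8) - base
d_wine   <- beer_hat(price = 5, income = 10, nachos_price = 4, wine_price = 9) - base

round(c(price = d_price, income = d_income, nachos = d_nachos, wine = d_wine), 2)
```

Each difference reproduces the corresponding coefficient, which is the "holding the other variables constant" reading of each $\hat{\beta}$.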

Multivariate OLS in R

    # run regression of testscr on str and el_pct
    school_reg_2 <- lm(testscr ~ str + el_pct, data = CASchool)

- Format for regression is `lm(y ~ x1 + x2, data = df)`

- `y` is the dependent variable (listed first!)

- `~` means “is modeled by” or “is explained by”

- `x1` and `x2` are the independent variables

- `df` is the dataframe where the data is stored
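
For readers without the class data at hand, the same `lm()` pattern can be tried on `mtcars`, a dataset that ships with R. The choice of `mpg`, `wt`, and `hp` is just an assumption for illustration and has nothing to do with the class size example.

```r
# Same lm(y ~ x1 + x2, data = df) pattern on a built-in dataset
car_reg <- lm(mpg ~ wt + hp, data = mtcars)

coef(car_reg)                  # one estimate per term
term_names <- names(coef(car_reg))
term_names                     # "(Intercept)" comes first, then the regressors in formula order
```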

Multivariate OLS in R II

    # look at reg object
    school_reg_2

    ## 
    ## Call:
    ## lm(formula = testscr ~ str + el_pct, data = CASchool)
    ## 
    ## Coefficients:
    ## (Intercept)          str       el_pct  
    ##    686.0322      -1.1013      -0.6498

- Stored as an `lm` object called `school_reg_2`, a `list` object

Multivariate OLS in R III

    summary(school_reg_2) # get full summary

    ## 
    ## Call:
    ## lm(formula = testscr ~ str + el_pct, data = CASchool)
    ## 
    ## Residuals:
    ##     Min      1Q  Median      3Q     Max 
    ## -48.845 -10.240  -0.308   9.815  43.461 
    ## 
    ## Coefficients:
    ##              Estimate Std. Error t value Pr(>|t|)    
    ## (Intercept) 686.03225    7.41131  92.566  < 2e-16 ***
    ## str          -1.10130    0.38028  -2.896  0.00398 ** 
    ## el_pct       -0.64978    0.03934 -16.516  < 2e-16 ***
    ## ---
    ## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
    ## 
    ## Residual standard error: 14.46 on 417 degrees of freedom
    ## Multiple R-squared:  0.4264, Adjusted R-squared:  0.4237 
    ## F-statistic:   155 on 2 and 417 DF,  p-value: < 2.2e-16

Multivariate OLS in R IV: broom

    # load packages
    library(broom)

    # tidy regression output
    tidy(school_reg_2)

| term | estimate | std.error | statistic |
|---|---|---|---|
| (Intercept) | 686.0322487 | 7.41131248 | 92.565554 |
| str | -1.1012959 | 0.38027832 | -2.896026 |
| el_pct | -0.6497768 | 0.03934255 | -16.515879 |

Multivariate Regression Output Table

    library(huxtable)

    huxreg("Model 1" = school_reg,
           "Model 2" = school_reg_2,
           coefs = c("Intercept" = "(Intercept)",
                     "Class Size" = "str",
                     "%ESL Students" = "el_pct"),
           statistics = c("N" = "nobs",
                          "R-Squared" = "r.squared",
                          "SER" = "sigma"),
           number_format = 2)

| | Model 1 | Model 2 |
|---|---|---|
| Intercept | 698.93 *** | 686.03 *** |
| | (9.47) | (7.41) |
| Class Size | -2.28 *** | -1.10 ** |
| | (0.48) | (0.38) |
| %ESL Students | | -0.65 *** |
| | | (0.04) |
| N | 420 | 420 |
| R-Squared | 0.05 | 0.43 |
| SER | 18.58 | 14.46 |

*** p < 0.001; ** p < 0.01; * p < 0.05.
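
The gap between the two Class Size estimates can be read as the omitted variable bias in Model 1. A standard OLS identity says the coefficient from the short regression equals the coefficient from the long regression plus the coefficient on the omitted variable times $\hat{\delta}_1$, the slope from regressing the omitted variable on the included one. Here $\hat{\delta}_1$ (the slope of el_pct on str) is not reported in these slides, so the arithmetic below backs it out from the rounded table values rather than estimating it:

$$\underbrace{-2.28}_{\text{Model 1}} = \underbrace{-1.10}_{\text{Model 2}} + \underbrace{(-0.65)}_{\hat{\beta}_2} \hat{\delta}_1 \implies \hat{\delta}_1 \approx \frac{-1.18}{-0.65} \approx 1.8$$

So the bias from omitting %ESL is roughly $-1.18$: a negative effect of %ESL on test scores times a positive relationship between %ESL and class size, which makes the Model 1 estimate a larger negative number than the Model 2 estimate.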