Overview

Today we start looking at associations between variables, which we will first attempt to quantify with measures like covariance and correlation. Then we turn to fitting a line to data via linear regression. We give an overview of the basic regression model, its parameters and how they are derived, and how to work with regressions in R using lm() and the tidyverse package broom.

We consider an extended example about class sizes and test scores, which uses a (Stata) dataset from an old textbook that I used to teach with, Stock and Watson (2007). Download the data to follow along with today's example.¹

Slides

Below, you can find the slides in two formats. Clicking the image will bring you to the HTML version of the slides in a new tab. Note that while going through the slides, you can type h to see a special list of viewing options, and type o for an outline view of all the slides.

The lower button will allow you to download a PDF version of the slides. I suggest printing the slides beforehand and using them to take additional notes in class (not everything is in the slides)!

Assignments

Problem Set 1

Problem Set 2 is due by Tuesday, September 21. Please see the instructions for more information on how to submit your assignment (there are multiple ways!).

Math Appendix

Variance

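Recall the variance of a discrete random variable $X$, denoted $var(X)$ or $\sigma_X^2$, is the expected value (probability-weighted average) of the squared deviations of $X$ from its mean (or expected value) $\bar{X}$ or $E(X)$.²

$$\sigma_X^2 = E\big[(X - E(X))^2\big] = \sum_{i=1}^n p_i\,(X_i - E(X))^2$$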

When all possible values of $X$ are equally likely (or we don't know the probabilities), we can write variance as a simple average of squared deviations from the mean:

$$\sigma_X^2 = \frac{1}{n}\sum_{i=1}^n (X_i - \bar{X})^2$$
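As a minimal sketch in base R (the vector `x` is made-up illustrative data, not from the course dataset), we can compute this simple average of squared deviations by hand and compare it with R's built-in `var()`, which computes the sample variance and therefore divides by $n-1$ instead of $n$:

```r
# Illustrative data (assumed for this sketch)
x <- c(1, 2, 3, 4)
n <- length(x)

# Simple average of squared deviations from the mean (divide by n)
var_avg <- sum((x - mean(x))^2) / n

# Sample variance as computed by var() (divides by n - 1)
var_sample <- var(x)

var_avg     # 1.25
var_sample  # 1.666667

# Rescaling by n / (n - 1) converts one into the other
all.equal(var_avg * n / (n - 1), var_sample)  # TRUE
```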

Variance has some useful properties:

Property 1: The variance of a constant is 0

$$var(c) = 0$$

If a random variable takes the same value (e.g. 2) with probability 1.00, $E(2) = 2$, so the average squared deviation from the mean is 0, because there are never any values other than 2.

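Property 2: The variance is unchanged for a random variable plus/minus a constant

$$var(X \pm c) = var(X)$$

This holds because the variance of a constant is 0: adding or subtracting $c$ shifts every value of $X$ and its mean $\bar{X}$ by the same amount, leaving every deviation $X_i - \bar{X}$ unchanged.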

Property 3: The variance of a scaled random variable is scaled by the square of the coefficient

$$var(aX) = a^2\,var(X)$$

Property 4: The variance of a linear transformation of a random variable is scaled by the square of the coefficient

$$var(aX + b) = a^2\,var(X)$$
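These four properties are easy to check numerically. The sketch below uses base R with a made-up vector `x` and arbitrary constants `a` and `b` (all assumed for illustration); `all.equal()` is used instead of `==` so tiny floating-point differences do not cause false mismatches:

```r
# Illustrative data and constants (assumed for this sketch)
x <- c(1, 2, 3, 4)
a <- 3
b <- 10

# Property 1: a constant has zero variance
var(rep(2, 4))                           # 0

# Property 2: adding a constant leaves variance unchanged
all.equal(var(x + b), var(x))            # TRUE

# Property 3: scaling by a multiplies variance by a^2
all.equal(var(a * x), a^2 * var(x))      # TRUE

# Property 4: a*X + b scales variance by a^2; the shift b has no effect
all.equal(var(a * x + b), a^2 * var(x))  # TRUE
```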

Covariance

For two random variables, $X$ and $Y$, we can measure their covariance (denoted $cov(X,Y)$ or $\sigma_{X,Y}$)³ to quantify how they vary together. A good way to think about this is: when $X$ is above its mean, would we expect $Y$ to also be above its mean (and covary positively), or below its mean (and covary negatively)? Remember, this is describing the joint probability distribution for two random variables.

$$\sigma_{X,Y} = E\big[(X - E(X))(Y - E(Y))\big]$$

Again, in the case of equally probable values for both $X$ and $Y$, covariance is sometimes written:

$$\sigma_{X,Y} = \frac{1}{n}\sum_{i=1}^n (X_i - \bar{X})(Y_i - \bar{Y})$$
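As a minimal base R sketch, using the same four points as the correlation example later in these notes, we can compute the average product of deviations directly and compare it with `cov()`, which (like `var()`) returns the sample version dividing by $n-1$:

```r
# Illustrative (x, y) points
x <- c(1, 2, 3, 4)
y <- c(1, 2, 4, 9)
n <- length(x)

# Average product of deviations from the means (divide by n)
cov_avg <- sum((x - mean(x)) * (y - mean(y))) / n

# Sample covariance as computed by cov() (divides by n - 1)
cov_sample <- cov(x, y)

cov_avg     # 3.25
cov_sample  # 4.333333
```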

Covariance also has a number of useful properties:

Property 1: The covariance of a random variable and a constant is 0

$$cov(X, c) = 0$$

Property 2: The covariance of a random variable and itself is the variable's variance

$$cov(X, X) = var(X)$$

Property 3: The covariance of two random variables $X$ and $Y$, each scaled by a constant ($a$ and $b$ respectively), is the product of the covariance and the constants

$$cov(aX, bY) = ab\,cov(X, Y)$$

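Property 4: If two random variables are independent, their covariance is 0

$$X \text{ and } Y \text{ independent} \implies cov(X, Y) = 0$$

(The reverse is not true: a covariance of 0 does not imply that $X$ and $Y$ are independent.)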
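A quick numerical check of these properties, as a sketch in base R with made-up data and constants (assumed for illustration). Property 4 is a statement about populations, so with simulated independent draws the sample covariance is only close to 0, not exactly 0:

```r
# Illustrative data and constants (assumed for this sketch)
x <- c(1, 2, 3, 4)
y <- c(1, 2, 4, 9)
a <- 2
b <- -5

# Property 1: covariance with a constant is 0
cov(x, rep(7, 4))                                # 0

# Property 2: covariance of a variable with itself is its variance
all.equal(cov(x, x), var(x))                     # TRUE

# Property 3: scaling by a and b multiplies the covariance by a * b
all.equal(cov(a * x, b * y), a * b * cov(x, y))  # TRUE

# Property 4 (approximate): independent simulated draws
set.seed(1)
u <- rnorm(1e5)
v <- rnorm(1e5)
cov(u, v)  # close to 0, but not exactly 0 in a finite sample
```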

Correlation

Covariance, like variance, is often cumbersome: its numerical value depends on the units of $X$ and $Y$, so on its own it does not really mean much. It is often convenient to normalize the covariance to a decimal between $-1$ and 1. We do this by dividing by the product of the standard deviations of $X$ and $Y$. This is known as the correlation coefficient between $X$ and $Y$, denoted $corr(X,Y)$ or $\rho_{X,Y}$ (for populations) or $r_{X,Y}$ (for samples):

$$\rho_{X,Y} = \frac{cov(X,Y)}{sd(X)\,sd(Y)} = \frac{\sigma_{X,Y}}{\sigma_X\,\sigma_Y}$$

Note this also means that covariance is the product of the standard deviations of $X$ and $Y$ and their correlation coefficient:

$$\sigma_{X,Y} = \rho_{X,Y}\,\sigma_X\,\sigma_Y$$
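Both directions of this relationship can be checked with base R's `cov()`, `sd()`, and `cor()`. A minimal sketch, reusing the illustrative four points from these notes:

```r
# Illustrative (x, y) points
x <- c(1, 2, 3, 4)
y <- c(1, 2, 4, 9)

# Correlation: covariance divided by the product of standard deviations
r_manual <- cov(x, y) / (sd(x) * sd(y))
all.equal(r_manual, cor(x, y))                   # TRUE
round(r_manual, 3)                               # 0.943

# Rearranged: covariance = correlation * sd(x) * sd(y)
all.equal(cor(x, y) * sd(x) * sd(y), cov(x, y))  # TRUE
```

Because the sample covariance and both sample standard deviations all divide by $n-1$, those factors cancel in the ratio, so `cor()` gives the same value whichever convention is used.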

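Another way to reach the (sample) correlation coefficient is by averaging the product of the $Z$-scores of each $(X, Y)$ pair, dividing by $n-1$ as with the sample variance:

$$r_{X,Y} = \frac{1}{n-1}\sum_{i=1}^n \left(\frac{X_i - \bar{X}}{s_X}\right)\left(\frac{Y_i - \bar{Y}}{s_Y}\right)$$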

Correlation has some useful properties that should be familiar to you:

- Correlation is always between $-1$ and 1

- A correlation of -1 is a downward sloping straight line

- A correlation of 1 is an upward sloping straight line

- A correlation of 0 implies no linear relationship (the variables may still be related in a nonlinear way)
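A small base R sketch of these properties, using made-up values of `x` (assumed for illustration). Points on a straight line give a correlation of exactly $1$ or $-1$ depending on the slope, while a perfect but U-shaped relationship like $Y = X^2$ (with $X$ symmetric around 0) has a correlation of 0, because correlation only measures linear association:

```r
# Illustrative x values, symmetric around 0
x <- c(-2, -1, 0, 1, 2)

cor(x, 3 * x + 1)   # 1: upward-sloping straight line
cor(x, -2 * x + 5)  # -1: downward-sloping straight line

# A perfect nonlinear (U-shaped) relationship can still have zero correlation
y_curve <- x^2
cor(x, y_curve)     # 0
```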

Calculating Correlation Example

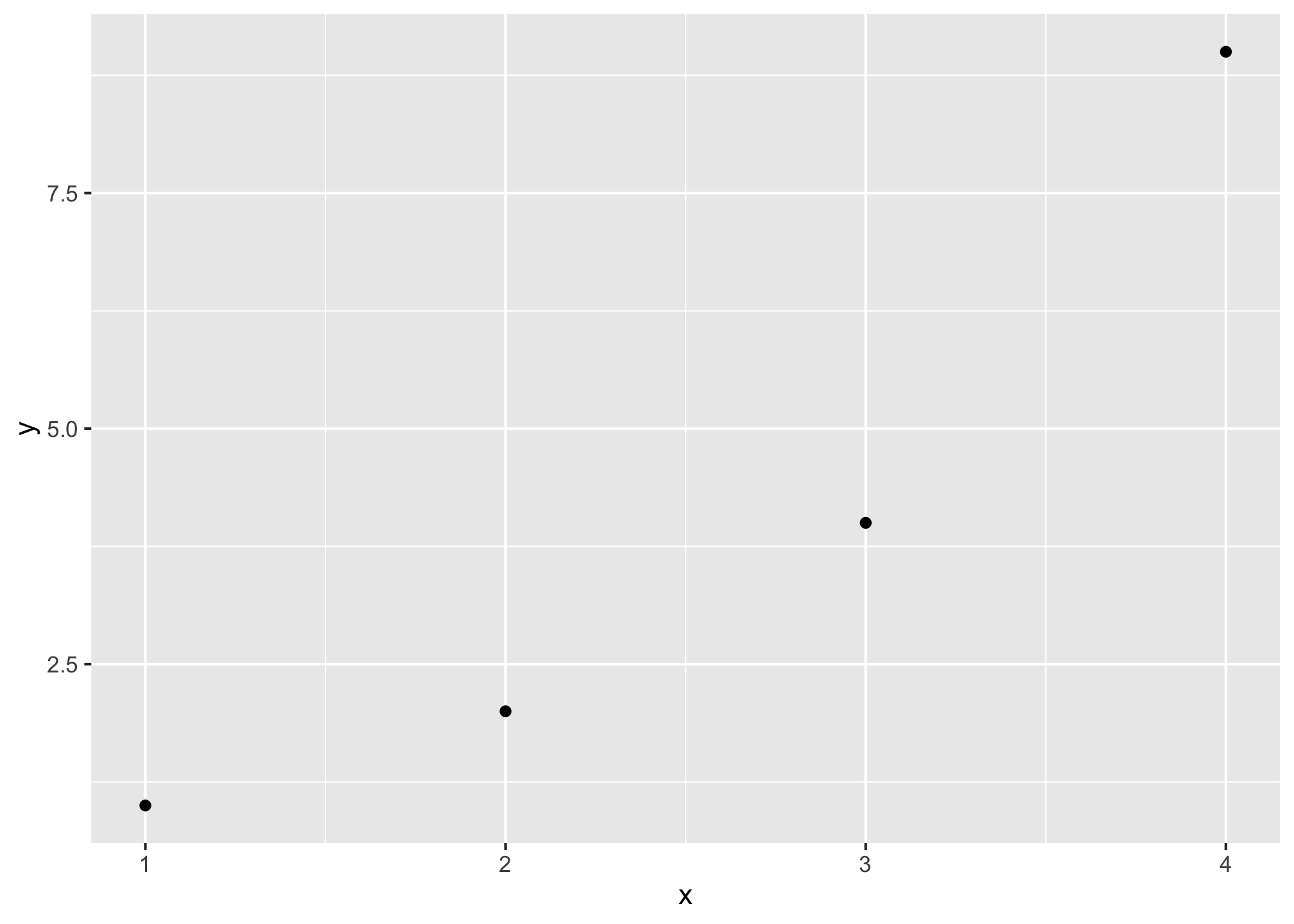

We can calculate the correlation of a simple data set (of 4 observations) using R to show how correlation is calculated. We will use the $Z$-score method. Begin with a simple set of $(X, Y)$ points:

```r
library(tidyverse)

# four (x, y) points
corr_example <- tibble(x = c(1, 2, 3, 4),
                       y = c(1, 2, 4, 9))

# scatterplot of the points
ggplot(corr_example, aes(x = x, y = y)) +
  geom_point()

corr_example %>%
  summarize(mean_x = mean(x), # find mean of x, it's 2.5
            sd_x = sd(x),     # find sd of x, it's 1.291
            mean_y = mean(y), # find mean of y, it's 4
            sd_y = sd(y))     # find sd of y, it's 3.559
## # A tibble: 1 × 4
##   mean_x  sd_x mean_y  sd_y
##    <dbl> <dbl>  <dbl> <dbl>
## 1    2.5  1.29      4  3.56

# take the z-score of x and y for each pair and multiply them
corr_example <- corr_example %>%
  mutate(z_product = ((x - mean(x)) / sd(x)) * ((y - mean(y)) / sd(y)))

corr_example %>%
  summarize(avg_z_product = sum(z_product) / (n() - 1), # average z products over n - 1
            actual_corr = cor(x, y),  # compare our answer to the actual cor() command!
            covariance = cov(x, y))   # just for kicks, what's the covariance?
## # A tibble: 1 × 3
##   avg_z_product actual_corr covariance
##           <dbl>       <dbl>      <dbl>
## 1         0.943       0.943       4.33
```

1. Note this is a .dta Stata file. You will need to (install and) load the package haven and use its read_dta() function to read Stata files into a dataframe.

2. Note there will be a difference in notation depending on whether we refer to a population (e.g. $\mu_X$) or to a sample (e.g. $\bar{X}$). Since in the overwhelming majority of cases we will deal with samples, I will use sample notation for means.

3. Again, to be technically correct, $\sigma_{X,Y}$ refers to populations and $s_{X,Y}$ refers to samples, in line with population vs. sample variance and standard deviation. Recall also that sample estimates of variance and standard deviation divide by $n-1$, rather than $n$. In large samples, this difference is negligible.