Overview

We begin with some more time for you to work on the R Practice from last class.

This week is about inferential statistics: using statistics calculated from a sample of data to infer the true (and unmeasurable) parameters that describe a population. In doing so, we can run hypothesis tests on our sample to determine a point estimate of a parameter, or construct a confidence interval from our sample to cast a range for the true parameter.

These are standard principles of statistics that you have hopefully learned before. If it has been a while (or never) since your last statistics class, these are some of the hardest concepts to understand at first glance. I recommend Khan Academy (the units from sampling distributions through significance tests cover this, though the whole class is helpful!) or Google for these concepts, as every statistics class will cover them in the standard way.

That being said, I do not cover inferential statistics in the standard way (see today's appendix below for an overview of the standard way). I think it will be more intuitive if I show you where these concepts come from by simulating a sampling distribution, as opposed to reciting the theoretical sampling distributions.

Readings

- Ch. 4 in Bailey, Real Econometrics

- Ch. 10 (optionally 8-9) in Modern Dive

- Visualizing Probability and Inference

Bailey teaches inferential statistics in the classical way (with reference to theoretical $Z$ and $t$ distributions, and $Z$ and $t$ tests). This is all valid. Again, you may wish to brush up with Khan Academy (the units from sampling distributions through significance tests cover this, though the whole class is helpful!).

The second “book,” Modern Dive (also free online, like R4DS), uses the infer package to run simulations for inferential statistics. Chapter 10 is focused on regression (but I also recommend the chapters leading up to it, which cover inferential statistics broadly using this method).

The final link is a great website for visualizing basic statistical concepts like probability, distributions, confidence intervals, hypothesis tests, the central limit theorem, and regression.

Slides

Below, you can find the slides in two formats. Clicking the image will bring you to the html version of the slides in a new tab. Note that while going through the slides, you can type h to see a special list of viewing options, and type o for an outline view of all the slides.

The lower button will allow you to download a PDF version of the slides. I suggest printing the slides beforehand and using them to take additional notes in class (not everything is in the slides)!

R Practice

Today you will be working on R practice problems on regression. Answers will be posted later on that page.

Assignments

Problem Set 3 Due Thurs Oct 7

Problem Set 3 is due by the end of the day on Thursday, October 7.

Appendix

Differences Between What We Learned in Class and Classical Statistics

In class, you learned the basics behind inferential statistics (p-values, confidence intervals, hypothesis testing) via empirical simulation of many samples permuted from our existing data. We took our sample, ran 1,000 simulations by permutation of our sample without replacement,¹ and calculated the statistic ($\hat{\beta}_1$, the slope) of each simulation. This gave us a (sampling) distribution of our sample statistics. We then found the probability on that distribution that we would observe our actual statistic in our actual data: this is the $p$-value.
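As a rough illustration of that procedure, here is a minimal sketch in base R. The data are simulated purely for illustration (the names `x`, `y`, and `simulated_slopes` are hypothetical), and it uses `sample()` and `lm()` rather than the infer package used in class.

```r
# Minimal sketch of a permutation test for a regression slope (base R only).
# The data below are simulated for illustration; they are not the class data.
set.seed(42)
n <- 100
x <- rnorm(n)
y <- 2 + 0.5 * x + rnorm(n)

# slope estimated from our "actual" sample
observed_slope <- unname(coef(lm(y ~ x))[2])

# shuffle x without replacement to break any x-y relationship (the null world),
# re-estimate the slope, and repeat 1,000 times
simulated_slopes <- replicate(1000, {
  x_shuffled <- sample(x, size = n, replace = FALSE)
  unname(coef(lm(y ~ x_shuffled))[2])
})

# two-sided p-value: share of simulated slopes at least as extreme as ours
p_value <- mean(abs(simulated_slopes) >= abs(observed_slope))
p_value
```

The simulated slopes pile up around 0 because shuffling removes any relationship between the variables; the p-value is simply the fraction of them that are as far from 0 as the observed slope.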

Classically, before the use of computers that could run and visualize 1,000s of simulations within seconds,² inferential statistics was taught using theoretical distributions. Essentially, we calculate a test-statistic by normalizing our finding against a theoretical (null) sampling distribution of our sample statistic, and find p-values by estimating the probability of observing that statistic on that theoretical distribution. These distributions are almost always normal or normal-like distributions. The distribution that we almost always use in econometrics is the (Student’s) $t$-distribution.

Furthermore, testing the null hypothesis $H_0: \beta_1 = 0$ is not the only type of hypothesis test, nor is the slope the only statistic we can test. In fact, there are many different types of hypothesis tests that are well-known and well-used; we focused entirely on regression (since that is the largest tool of the course).

This appendix will give you more background on the theory of inferential statistics, and is more in line with what you may have learned in earlier statistics courses.

Inferential Statistics Basics

It is important to remember several statistical distributions, tools, and facts. Most of them have to do with the normal distribution. If $X$ is normally distributed with mean $\mu$ and standard deviation $\sigma$:

$$X \sim N(\mu, \sigma)$$

library(tidyverse)## ── Attaching packages ─────────────────────────────────────── tidyverse 1.3.1 ──## ✓ ggplot2 3.3.5 ✓ purrr 0.3.4

## ✓ tibble 3.1.4 ✓ dplyr 1.0.7

## ✓ tidyr 1.1.3 ✓ stringr 1.4.0

## ✓ readr 2.0.0 ✓ forcats 0.5.1## ── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

## x dplyr::filter() masks stats::filter()

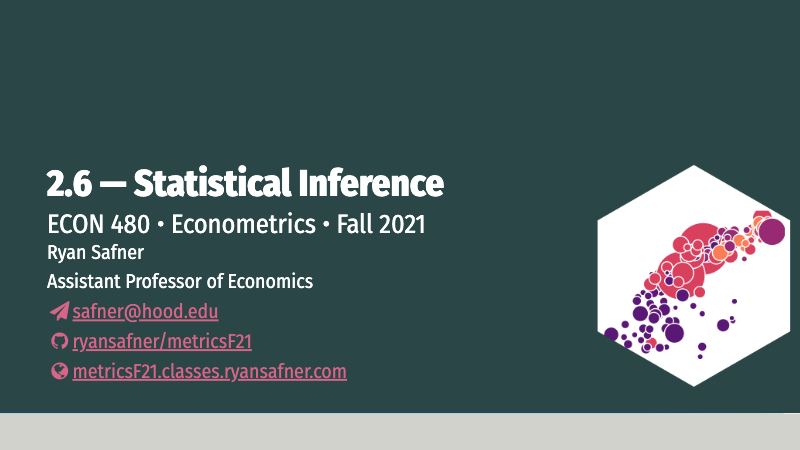

## x dplyr::lag() masks stats::lag()# plot a theoretical normal distribution without any data

# our "geom" here is the "stat_function()" command, which draws statistical functions

normal.pdf <- ggplot(data = data.frame(x = -4:4), # our "data" is just a sequence of x from -4 to 4

aes(x = x))+

stat_function(fun = dnorm, # dnorm is the normal distributions

args = list(mean = 0, sd = 1), # set mean = 0 and sd = 1

color="blue")+

scale_x_continuous(breaks = seq(-4,4,1))+

xlab("X (or Z-score)")+ylab("p(X)")+

theme_light()

normal.pdf

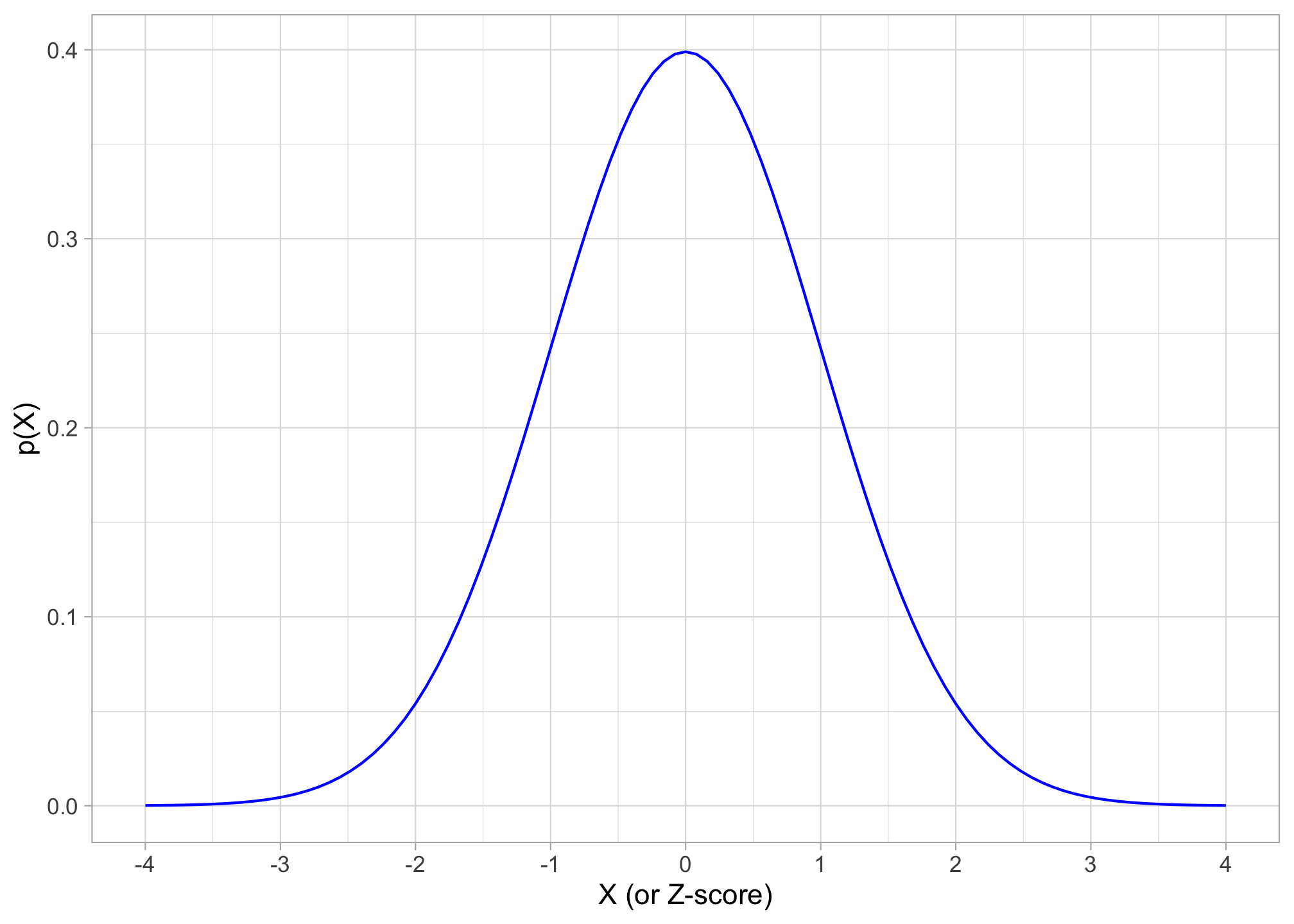

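Then recall the 68-95-99.7 empirical rule, that:

$$P(\mu - 1\sigma \leq X \leq \mu + 1\sigma) \approx 0.68$$

$$P(\mu - 2\sigma \leq X \leq \mu + 2\sigma) \approx 0.95$$

$$P(\mu - 3\sigma \leq X \leq \mu + 3\sigma) \approx 0.997$$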

Again, in English: “68% of the observations fall within 1 standard deviation of the mean; 95% fall within 2 standard deviations of the mean, and 99.7% fall within 3 standard deviations of the mean.”
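The rule can be checked numerically with a short sketch using `pnorm()`, the normal cumulative distribution function in base R (the names `k` and `within_k` are just illustrative):

```r
# probability of falling within k standard deviations of the mean, for k = 1, 2, 3
k <- 1:3
within_k <- pnorm(k) - pnorm(-k)
round(within_k, 4)
## [1] 0.6827 0.9545 0.9973
```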

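If we have the standard normal distribution with mean 0 and standard deviation 1, $Z \sim N(0, 1)$, the rule becomes:

$$P(-1 \leq Z \leq 1) \approx 0.68$$

$$P(-2 \leq Z \leq 2) \approx 0.95$$

$$P(-3 \leq Z \leq 3) \approx 0.997$$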

Again, we can standardize any normally-distributed random variable by finding the Z-score of each observation:

$$Z = \frac{X_i - \mu}{\sigma}$$

This ensures the mean will be 0 and the standard deviation will be 1. Thus, $Z$ is the number of standard deviations above or below the mean an observation is.
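A small sketch of standardizing in R, using a simulated (hypothetical) variable. Note one assumption: since a sample rarely comes with its true population values, the code standardizes with the sample mean and sample standard deviation.

```r
# simulate a hypothetical normally distributed variable
set.seed(1)
x <- rnorm(1000, mean = 50, sd = 10)

# standardize each observation (here using the sample mean and sample sd)
z <- (x - mean(x)) / sd(x)

mean(z) # essentially 0 (up to floating-point error)
sd(z)   # 1

# a single observation of 70 from a population with mean 50 and sd 10
z_70 <- (70 - 50) / 10
z_70    # 2 standard deviations above the mean
```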

We can use $Z$-scores to find the probability of any range of observations of $X$ occurring in the distribution.

```r
pnorm(-2, mean = 0, sd = 1, lower.tail = TRUE) # area to left of -2
## [1] 0.02275013
pnorm(2, mean = 0, sd = 1, lower.tail = TRUE) # area to left of 2
## [1] 0.9772499
pnorm(2, mean = 0, sd = 1, lower.tail = FALSE) # area to RIGHT of 2
## [1] 0.02275013
pnorm(2, mean = 0, sd = 1, lower.tail = TRUE) - pnorm(-2, mean = 0, sd = 1, lower.tail = TRUE) # area between -2 and 2
## [1] 0.9544997
```

The Central Limit Theorem

Inferential statistics can be summarized in 2 sentences:

There are unknown parameters that describe a population distribution that we want to know. We use statistics that describe a sample to estimate the population parameters.

Recall there is an element of randomness in our sample statistics due to sampling variability. For example, if we take the mean of one sample, $\bar{x}$, and then take the mean of a different sample, the $\bar{x}$’s will be slightly different. We can conceive of a distribution of $\bar{x}$’s across many different samples, and this is called the sampling distribution of the statistic $\bar{x}$.

Via the sampling distribution, the sample statistic itself is distributed with

- mean $E[\bar{x}] = \mu_X$ (the true population mean)

- standard deviation $\sigma_{\bar{x}}$

Central Limit Theorem: with a large enough sample size ($n \geq 30$), the sampling distribution of a sample statistic is approximately normal.³

Thus, the sampling distribution of the sample mean ($\bar{x}$):

$$\bar{x} \sim N\left(\mu_X, \frac{\sigma_X}{\sqrt{n}}\right)$$

The second term we call the standard error of the sample mean.⁴ Note that it takes the true standard deviation of the population ($\sigma_X$)⁵ and divides it by the square root of the sample size, $\sqrt{n}$.
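To see the Central Limit Theorem at work, here is a minimal simulation sketch. It assumes a hypothetical population that is clearly not normal (exponential with rate 1, so its mean and standard deviation are both 1) and draws many samples of size 50.

```r
set.seed(123)
n <- 50 # sample size

# population: exponential with rate 1, so mu = 1 and sigma = 1 (clearly non-normal)
# take 10,000 samples of size n and record each sample mean
sample_means <- replicate(10000, mean(rexp(n, rate = 1)))

mean(sample_means) # close to the population mean, 1
sd(sample_means)   # close to the standard error, sigma / sqrt(n)
1 / sqrt(n)        # theoretical standard error, about 0.141

# hist(sample_means) would show a roughly bell-shaped distribution
```

Even though individual draws from this population are heavily skewed, the distribution of the sample means is approximately normal and its spread matches the standard error formula.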

Thus if we know the true population standard deviation ($\sigma$), then we can simply use the normal distribution for confidence intervals and hypothesis tests of a sample statistic. Since we often do not, we need to use another distribution for inferential statistics, often the $t$-distribution.

If We Don’t Know $\sigma$: The Student’s $t$-Distribution

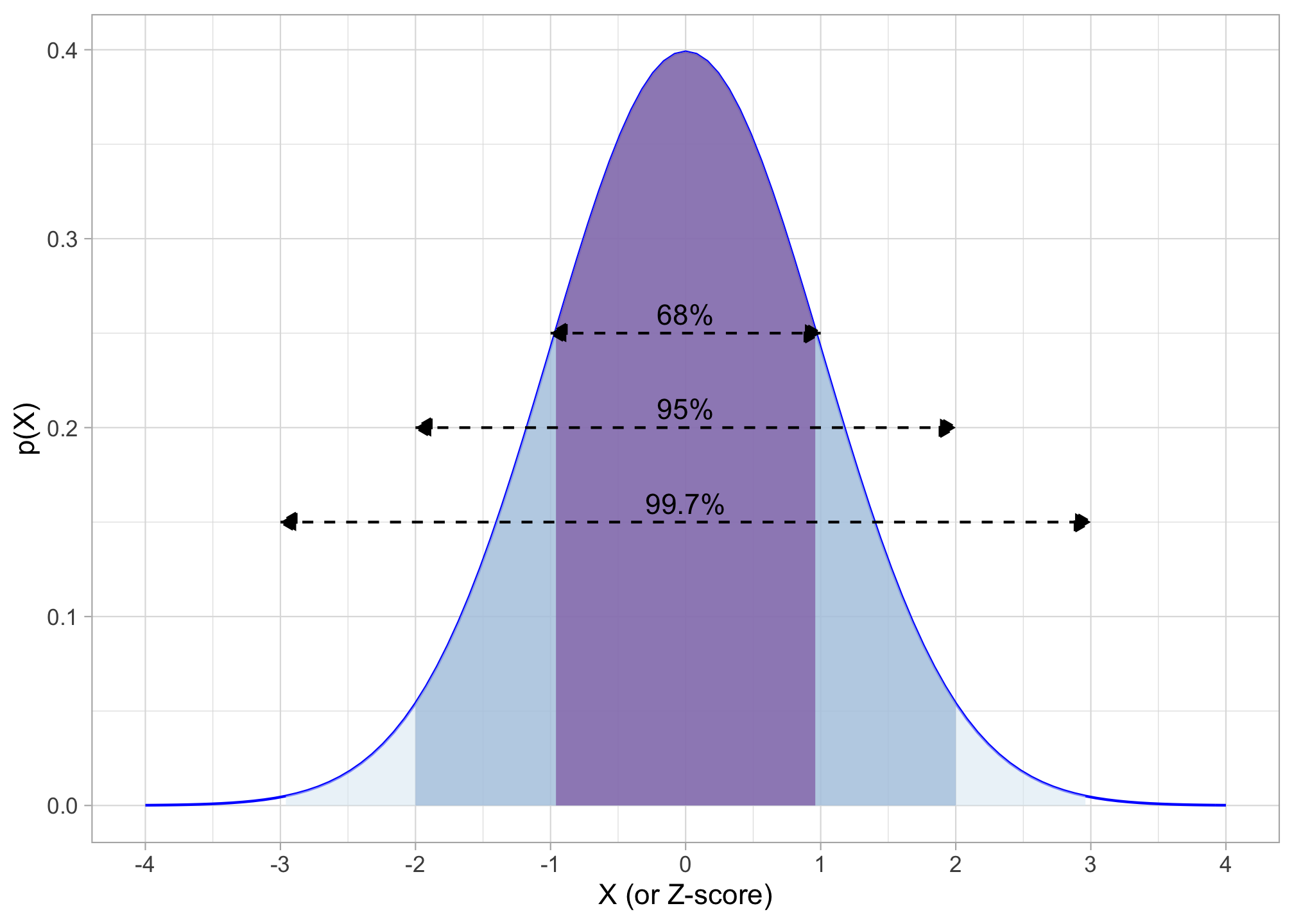

We rarely, if ever, know the true population standard deviation for variable $X$, $\sigma_X$. Additionally, we sometimes have sample sizes of $n < 30$. If either of these conditions is true, we cannot leverage the Central Limit Theorem and simplify with a standard normal distribution. Instead of the normal distribution, we use a Student’s $t$-distribution:⁶

$$t = \frac{\bar{x} - \mu}{\frac{s}{\sqrt{n}}}$$

$t$ is functionally equivalent to the idea of a $Z$-score, with some slight modifications:

- $\bar{x}$ is our estimated statistic (e.g. sample mean)

- $\mu$ is the true population parameter (e.g. population mean)

- $s$ is the sample standard deviation

- $n$ is the sample size

$t$-scores similarly measure the number of standard deviations an observation is above or below the mean.

The other main difference between normal distributions/$Z$-scores and $t$ distributions/$t$-scores is that $t$ distributions have degrees of freedom.⁷


The standard $t$-distribution looks normal-ish, with a mean of 0, but with more area in the tails of the distribution. The exact shape depends on the degrees of freedom ($df = n - 1$). As $n \rightarrow \infty$, $df \rightarrow \infty$, and the $t$-distribution approximates a normal distribution.
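One way to see this convergence is to compare critical values. The sketch below uses the base R quantile functions `qt()` and `qnorm()` to find the value that leaves 2.5% in the upper tail; the particular degrees of freedom are arbitrary choices for illustration.

```r
# value leaving 2.5% in the upper tail, for t distributions with increasing df
df <- c(2, 5, 30, 100, 1000)
t_crit <- qt(0.975, df = df)

# the same cutoff on the standard normal distribution
z_crit <- qnorm(0.975)

data.frame(df = df, t_crit = round(t_crit, 3), z_crit = round(z_crit, 3))
```

The $t$ cutoffs are always larger than the normal cutoff (fatter tails) and shrink toward it as the degrees of freedom grow.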

By convention, in regression we always use $t$-distributions for confidence intervals and hypothesis tests. For nearly all of the confidence intervals and hypothesis tests below, we functionally replace $Z$ with $t$.

Confidence Intervals

A confidence interval describes the range of estimates for a population parameter in the form:

$$(\text{estimate} - \text{margin of error}, \; \text{estimate} + \text{margin of error})$$

Our confidence level is $1 - \alpha$

- $\alpha$ again is the “significance level”, the probability that the true population parameter is not within our confidence interval⁸

- Typical confidence levels: 90%, 95%, 99%

A confidence interval tells us that if we were to draw many samples and construct an interval from each one, $(1-\alpha)$% of those intervals would contain the true population parameter.
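This repeated-sampling interpretation can be illustrated with a small simulation. The sketch below assumes a hypothetical normal population with known mean and standard deviation (the values of `mu`, `sigma`, and `n` are made up), builds a 95% interval from each of 5,000 samples, and counts how often the interval contains the true mean.

```r
set.seed(2021)
mu <- 10; sigma <- 2; n <- 40 # hypothetical population and sample size
z_crit <- qnorm(0.975)        # critical value for 95% confidence

# for each simulated sample, build the interval and check whether it covers mu
covers <- replicate(5000, {
  x_bar <- mean(rnorm(n, mean = mu, sd = sigma))
  moe <- z_crit * sigma / sqrt(n)
  (x_bar - moe <= mu) & (mu <= x_bar + moe)
})

mean(covers) # share of intervals containing mu; should be near 0.95
```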

To construct a confidence interval, we do the following:

- Calculate the sample statistic.

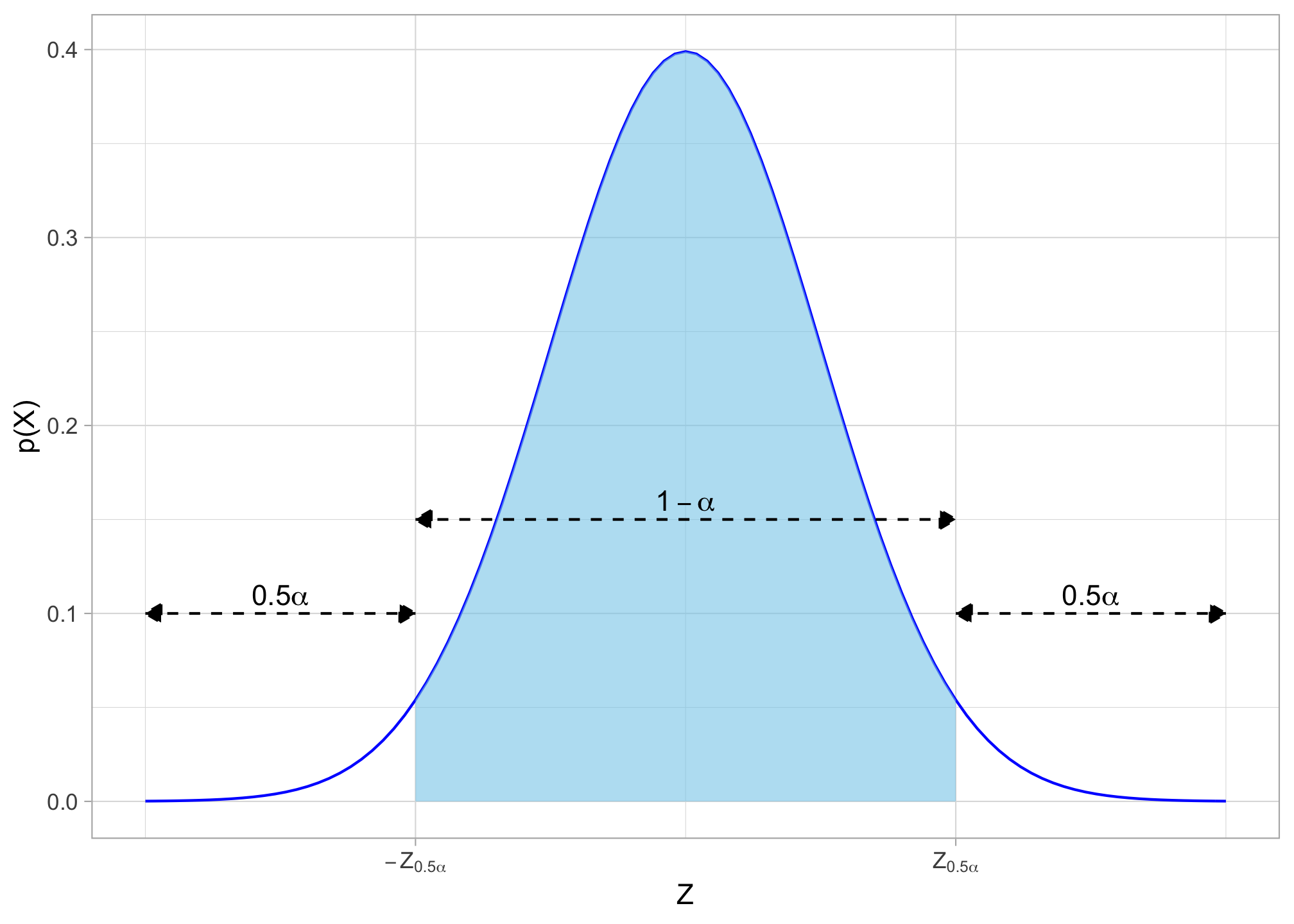

- Find the $Z$-score that corresponds to the desired confidence level.⁹ We need to find what are called the “critical values” of $Z$, which we will call $\pm Z_{0.5\alpha}$, on the normal distribution that put $(1-\alpha)$ probability between $\pm Z_{0.5\alpha}$ and $0.5\alpha$ in each of the tails of the distribution. The distribution would look like this:


- The confidence interval between the two $Z$-scores $-Z_{0.5\alpha}$ and $Z_{0.5\alpha}$ contains the desired $(1-\alpha)$% of observations

- The area beyond each $Z$-score contains $0.5\alpha$% of observations in each direction, for a total of $\alpha$% beyond the critical values of $Z$

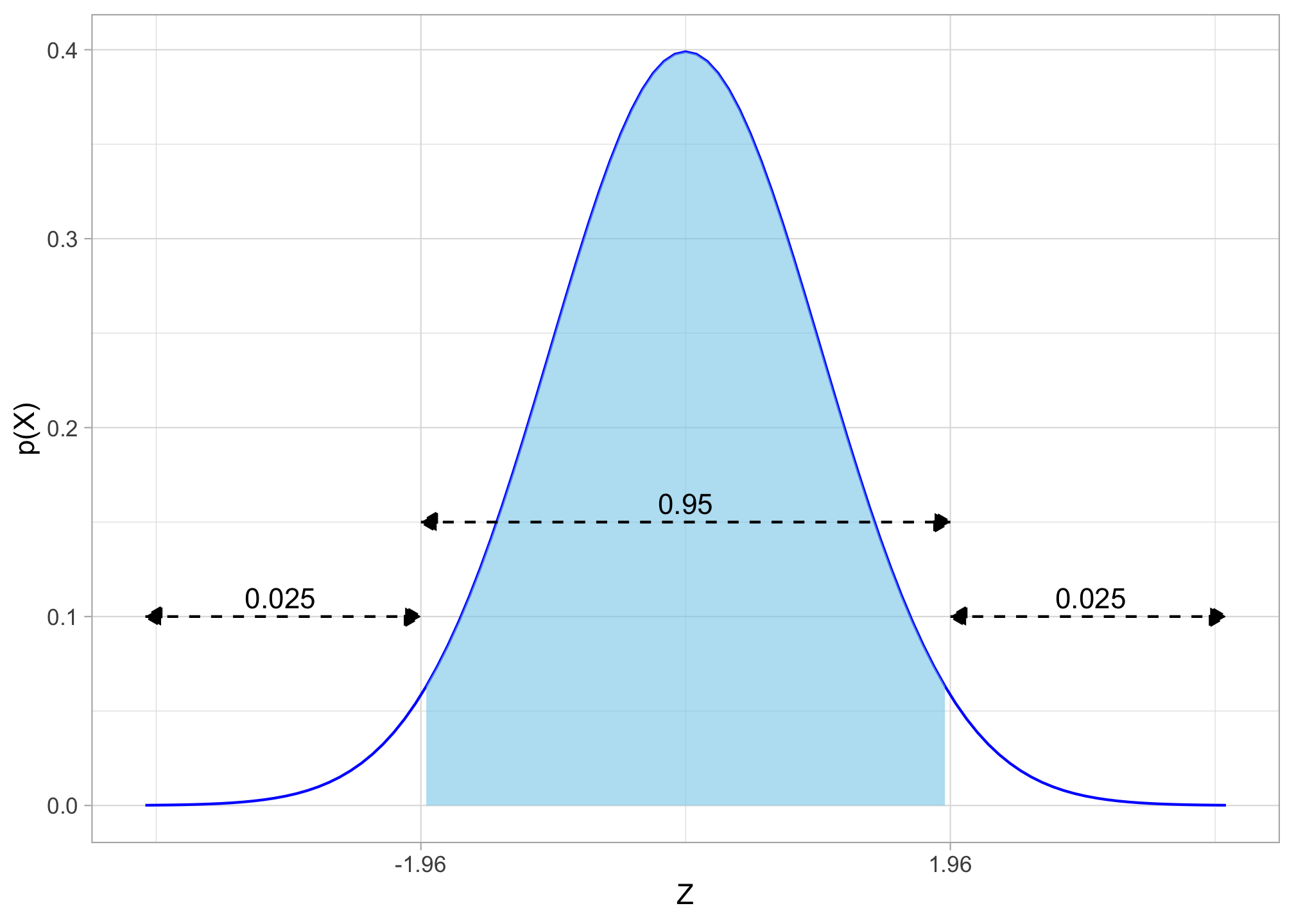

Note that the image above is abstract. So for example, if we wanted a (typical) 95% confidence interval with $\alpha = 0.05$, the critical value(s) of $Z$ are $\pm 1.96$,¹⁰ and looking on the distribution:

The critical values of $Z$ are often given in $Z$-tables, which you can find in classical statistics textbooks or online. Critical values of $Z$ for common confidence levels are well known:

| Confidence Level | $\alpha$ | $\pm Z_{0.5\alpha}$ |
|---|---|---|
| 90% | 0.10 | $\pm 1.645$ |
| 95% | 0.05 | $\pm 1.96$ |
| 99% | 0.01 | $\pm 2.576$ |

- Calculate the margin of error (MOE)

$$MOE = Z_{0.5\alpha} \frac{\sigma}{\sqrt{n}}$$

The margin of error is the critical value of $Z$ times the standard error of the estimate ($\frac{\sigma}{\sqrt{n}}$).

- Construct the confidence interval

$$CI = \left(\text{estimate} - MOE, \; \text{estimate} + MOE\right)$$

The confidence interval is simply our estimate plus and minus the margin of error.

- Interpret the confidence interval in the context of the problem

“We estimate with [1-alpha]% confidence that the true [population parameter] is between [lowerbound] and [upperbound]”.
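The steps above can be sketched in R as follows. The sample is simulated and the population standard deviation of 15 is assumed known purely for illustration; the last lines show the usual substitution of the sample standard deviation and a $t$ critical value when it is not known.

```r
set.seed(7)
x <- rnorm(36, mean = 100, sd = 15) # hypothetical sample
sigma <- 15                         # population sd, assumed known here
n <- length(x)
alpha <- 0.05                       # for a 95% confidence level

x_bar  <- mean(x)                   # calculate the sample statistic
z_crit <- qnorm(1 - alpha / 2)      # critical value of Z, about 1.96
moe    <- z_crit * sigma / sqrt(n)  # margin of error
ci     <- c(lower = x_bar - moe, upper = x_bar + moe) # confidence interval
ci

# if sigma is unknown: use the sample sd and a t critical value with n - 1 df
t_crit <- qt(1 - alpha / 2, df = n - 1)
ci_t   <- x_bar + c(-1, 1) * t_crit * sd(x) / sqrt(n)
ci_t
```

With these made-up numbers the margin of error is about 4.9, so the interpretation would read: “We estimate with 95% confidence that the true population mean is between `ci["lower"]` and `ci["upper"]`.”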

1. That is, for each simulation, we randomly selected observations from our existing sample to be in the simulation, and then did not put that observation back in the pool to possibly be selected again.

2. Even when I was in graduate school, 2011–2015.

3. If samples are i.i.d. (independently and identically distributed, i.e. drawn randomly from the same population and then replaced), we don’t even need to know the population distribution to assume normality.

4. Instead of the “standard deviation”. “Standard error” refers to the sampling variability of a sample statistic, and is always talking about a sampling distribution.

5. Which we need to know! We often do not know it!

6. “Student” was the pen name of William Sealy Gosset, who has one of the more interesting stories in statistics. He worked for Guinness in Ireland testing the quality of beer. He found that with small sample sizes, normal distributions did not yield accurate results. He came up with a more accurate distribution, and since Guinness would not let him publish his findings, he published it under the pseudonym of “Student.”

7. Degrees of freedom, $df$, are the number of independent values used for the calculation of a statistic minus the number of other statistics used as intermediate steps. For sample standard deviation $s$, we use $n$ deviations $(x_i - \bar{x})$ and 1 parameter $(\bar{x})$, hence $df = n - 1$.

8. Equivalently, $\alpha$ is the probability of a Type I error: a false positive finding where we incorrectly reject a null hypothesis when the null hypothesis is in fact true.

9. Of course, if we don’t know the population $\sigma$, we need to use the $t$-distribution and find critical $t$-scores instead of $Z$-scores. See above.

10. Note this is the precise value behind the rule of thumb that 95% of observations fall within 2 standard deviations of the mean!